Using data to gauge progress, make decisions and inform our course of action is the best way to ensure success during digital transformations and software implementation projects. But not all data is created equal—so how do you know the difference? (Check out the full podcast at the end of this article or on the CloudWars website here.)

“There are three kinds of lies: lies, damned lies and statistics.” – Benjamin Disraeli

Vanity metrics are data that make a subject look good but do not help you understand performance in a way that informs future strategies. They’re easy to come by, simple to understand and cheap. You’ll see them every time you read an ad, listen to a sales pitch about a digital transformation, hear a political speech, or tune into a quarterly investor call. Because they are so easy to obtain and promote, they’re something like junk food: tasty but not really healthy. In the world of Enterprise Software, they’re metrics such as self-reported customer satisfaction statistics (“97%!”), number of clients (“10,000+!”), and run rate (EBITDA, revenue, etc.). These are tried-and-true crowd-pleasers, but they don’t necessarily tell you the whole story behind a vendor or provider, and they certainly shouldn’t be the critical data upon which important decisions are made.

Are all vanity metrics bad? Can they be dangerous?

No. All legitimate data can tell a part of the story, and vanity metrics can be used to illustrate the positives. Since they are easy to collect and put into chart form (“executive eye candy,” as they say), they can serve as leading indicators for larger goals. For instance, for a software project, the rate of user login creations or sign-ups is a leading indicator of user adoption. However, if you don’t also measure return visitors or look specifically at the type of activity you have, sign-ups alone won’t get you to your adoption goal. If you’re selecting new software and looking at the number of customers a vendor has, but not validating how those customers are using the system you’re about to purchase, that metric won’t help you. Likewise, a vendor’s run rate and profitability might be impressive, but if their customer and employee churn is high, those glitzy performance stats are likely to dull in the future. This is where vanity metrics become dangerous: used without looking at the larger picture, they can lead to confirmation bias and poor decision-making.
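To make the sign-up example concrete, here is a minimal Python sketch that contrasts a raw sign-up count with a return-visitor rate. The login events, the `return_visitor_rate` helper, and the rule that a user counts as "returning" once they log in on two or more distinct days are all illustrative assumptions, not figures or definitions from any real project.

```python
from collections import defaultdict
from datetime import date

# Hypothetical login events: (user_id, login_date). Illustrative data only.
logins = [
    ("ana", date(2024, 1, 2)),
    ("ana", date(2024, 1, 9)),
    ("ben", date(2024, 1, 3)),
    ("cy", date(2024, 1, 3)),
    ("cy", date(2024, 1, 3)),   # second login on the same day
    ("dee", date(2024, 1, 4)),
    ("dee", date(2024, 1, 11)),
]


def return_visitor_rate(events):
    """Share of users who logged in on at least two distinct days."""
    days_by_user = defaultdict(set)
    for user_id, day in events:
        days_by_user[user_id].add(day)
    if not days_by_user:
        return 0.0
    returning = sum(1 for days in days_by_user.values() if len(days) >= 2)
    return returning / len(days_by_user)


signups = len({user_id for user_id, _ in logins})  # the vanity number
rate = return_visitor_rate(logins)                 # the adoption signal
print(f"sign-ups: {signups}, return-visitor rate: {rate:.0%}")
```

With this made-up data, four people signed up but only half of them came back on a second day, which is the gap a sign-up chart alone would hide.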

So, how do you evaluate metric legitimacy? How do you spot shallow metrics?

There are two major tells when looking at metrics. First, who measured it and how was the measurement done? Second, why does this metric matter? Or, more importantly, what end goal is the metric measuring toward?

Who is delivering the metric is important. Self-reported metrics aren’t as objective as independent or externally-validated metrics. Even better are externally-validated metrics from the customer perspective (which is what Raven Intelligence is all about). It’s also important to know how the data was collected and how the data point is defined. Last week, a very well-known software vendor boasted a “97% on-time software deployment” metric in an analyst call. That was particularly difficult to believe, as Enterprise Software projects even in the best of situations average around 60% on-time (and from a customer perspective, the Raven Intelligence figure for this vendor is around 67% on-time). In this case, it would also have been helpful to understand how “on-time” was defined. If “on-time” was based upon a moving target date, that 97% might be true.

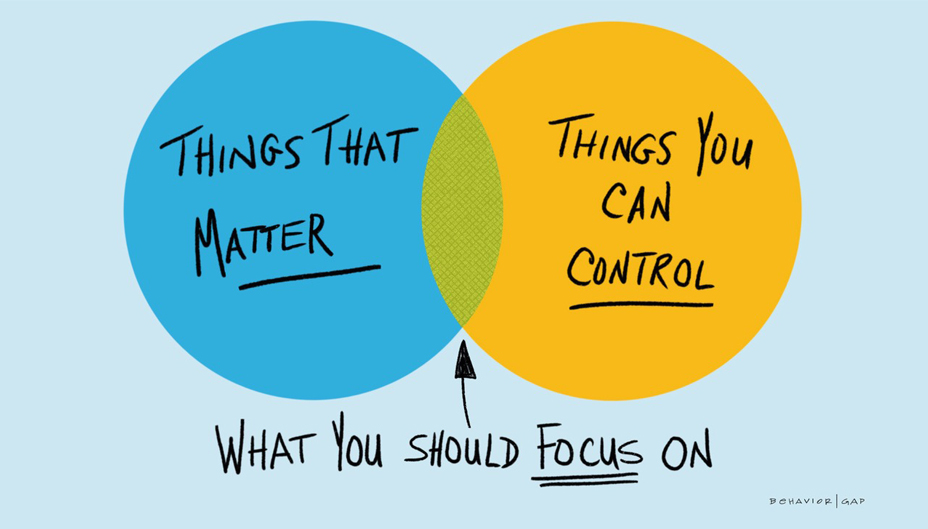
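The effect of a moving target date can be sketched in a few lines of Python. The project records and field names below are hypothetical. The only point is that the same deliveries can produce very different on-time rates depending on whether go-live is compared with the original baseline date or with the most recently revised date.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Project:
    # All fields and values are hypothetical, for illustration only.
    name: str
    baseline_due: date   # date agreed at project kickoff
    revised_due: date    # latest re-planned date (the "moving target")
    went_live: date


projects = [
    Project("A", date(2024, 3, 1), date(2024, 3, 1), date(2024, 2, 28)),
    Project("B", date(2024, 3, 1), date(2024, 6, 1), date(2024, 5, 30)),
    Project("C", date(2024, 4, 1), date(2024, 7, 1), date(2024, 7, 1)),
    Project("D", date(2024, 5, 1), date(2024, 8, 1), date(2024, 8, 15)),
]


def on_time_rate(items, due_date):
    """Fraction of projects that went live on or before due_date(project)."""
    if not items:
        return 0.0
    on_time = sum(1 for p in items if p.went_live <= due_date(p))
    return on_time / len(items)


against_baseline = on_time_rate(projects, lambda p: p.baseline_due)
against_revised = on_time_rate(projects, lambda p: p.revised_due)
print(f"on-time vs. baseline date: {against_baseline:.0%}")
print(f"on-time vs. revised date:  {against_revised:.0%}")
```

In this invented sample, one project in four meets its baseline date, while three in four meet their revised date. Asking which definition sits behind a reported figure is therefore a reasonable first question.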

The second test is to question and validate why a metric is being used. What is the purpose in reporting on a metric? Is it to share good news and make people feel good about progress? If so, that is great: metrics are powerful when used to inspire and recognize achievement. But if a metric is being used to inform action and set a course, you want to make sure it tells a complete story. Actionable metrics are those that you can tie back to a business objective or purpose and that correlate to more than a single area. For instance, in the “on-time” delivery example above, how does that metric correlate to overall project success and impact? Are there other metrics that correlate as well (such as “on-budget delivery” or “rate of team change”)? Those types of metrics are much more difficult to measure, but they tell a much more complete (and credible) story of success or failure.

What are the metrics that matter in digital transformation?

If you’re in the midst of a digital transformation within your organization, you’ll probably see metrics coming from everywhere. While you’ll want to ensure the milestone phases of your project stay on track (vanity metrics are very helpful here!), you also want to make sure these metrics tie to a few “north star” metrics that ultimately measure the business value delivered. These are data sets that show revenue improvement, cost reduction or working capital improvement, and they will ultimately help you gauge the return on investment of both your time and money. Here’s a short list, along with a link to a podcast on this topic.

Metrics that matter most:

1. Business Value Delivered (revenue improvement, cost reduction, or working capital improvement)

2. Stakeholder Adoption. Are my users actually using the system for the purpose intended?

3. Customer Experience (measured by CSAT and churn, or by stakeholder satisfaction, e.g. employee experience, if it is an internal initiative)

“If your digital transformation initiatives don’t improve the quality of the customer experience, are they really meaningful at all?”

Note that there is a difference between customer service and customer experience. A high degree of customer service doesn’t guarantee a positive overall customer experience.

4. Engagement between business team & IT

The best metric, especially during the first year of a transformation, is the engagement level of all business and IT team members.

If engagement is high, you have the foundation upon which to be successful. If engagement is low, your transformation is flawed, and failure is likely.

5. System Uptime and Availability (How difficult is this thing to support? Have I customized a system that will be difficult to maintain over time?)

The availability and efficiency of internal-facing applications significantly affect workforce productivity, while the availability and efficiency of external-facing applications greatly impact external stakeholders, particularly customer experience, satisfaction, and retention.
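As a rough illustration of how availability is often expressed, the sketch below computes it as uptime divided by total time in a reporting period. The outage durations and the 30-day window are made-up values, and the `availability` helper is hypothetical. Real monitoring tools may define downtime differently, for example by excluding planned maintenance.

```python
from datetime import timedelta

# Hypothetical outage durations in one 30-day reporting window.
outages = [timedelta(minutes=42), timedelta(hours=3), timedelta(minutes=18)]
period = timedelta(days=30)


def availability(period, outages):
    """Uptime as a fraction of the period: (period - downtime) / period."""
    downtime = sum(outages, timedelta())
    if downtime > period:
        raise ValueError("downtime cannot exceed the reporting period")
    return (period - downtime) / period


print(f"availability: {availability(period, outages):.3%}")
```

Tracked over time and split by internal-facing and external-facing applications, a figure like this says more about supportability than a single headline uptime number.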

Final thoughts

If you want change that is more than skin deep, you’re going to have to measure and use more than vanity metrics. It might take longer to vet and collect, but in the end you’ll have real data that can show authentic progress and help you ensure a more solid future state.